From October ’23

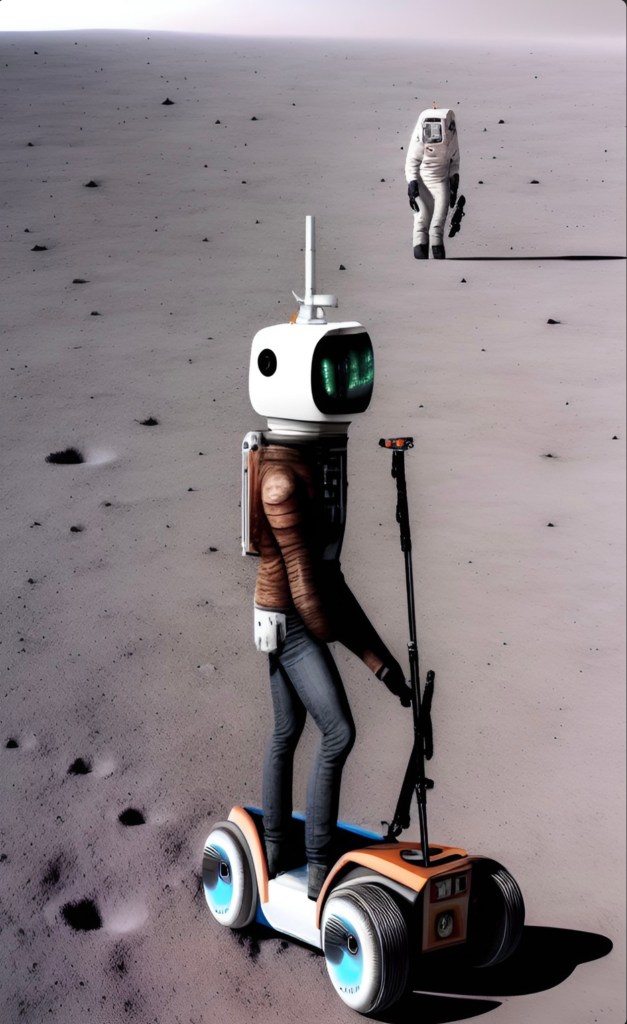

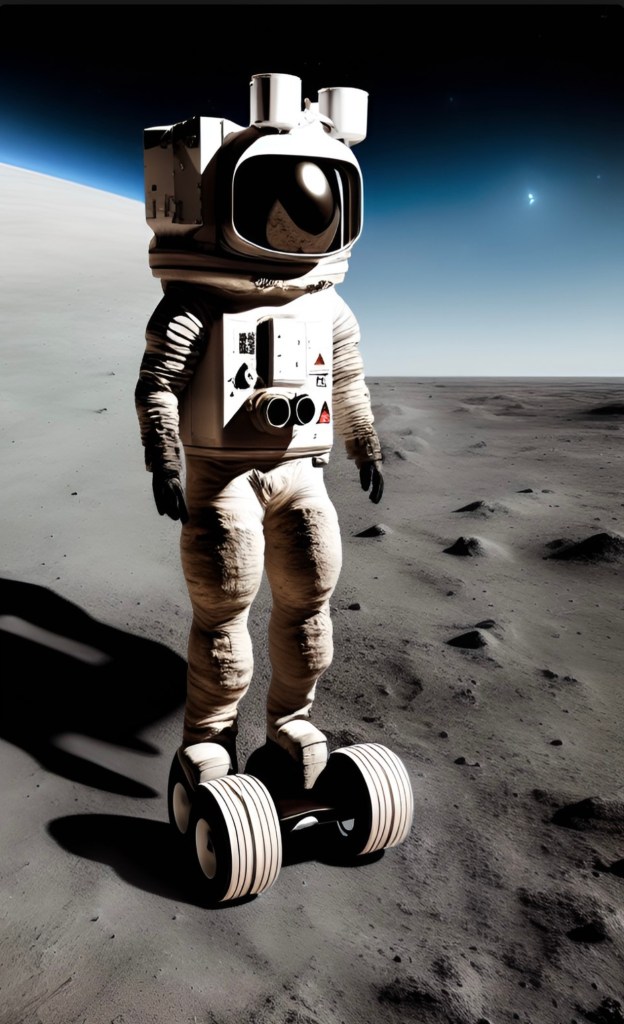

Back in Jan 2023 I somehow got it into my head that I should investigate or at least have a look at what all the fuss was about in relation to AI and Generative Image making. I didn’t understand it in the slightest and was less than impressed with the idea of instructing some new fangled software to make me an image of ‘X’. What was the point, I can draw, paint, print, photograph and work across a range of CGI software and digital compositing software. Why should i bother with AI? Somehow the wheel turned and I found myself thinking that I could tackle the beast head on and do something different with it. Being then rebel that I am it came to me that i should fashion prompts along the lines of creating problems for the AI software to solve. Otherwise it’s just same ol’ same ol’.

So down one rabbit hole after another to try and get to the bottom of how to go about setting my mischief into place. At the time the hot topic was intellectual property theft, mainly from photographers and digital artists. I knew enough to know that this was a bit of a dark horse and a furphy to boot, but it gave me the leg up that I needed. What kind of images / image data won’t have been picked by the web crawlers trawling online storage and social media platforms; in brief, what sort of images don’t exist. That got my imagination working and the first prompts were quickly being tested. The thing is, I want to be surprised, I don’t want the result to be expected or predictable and this is where I also found a working companion in GANN’s (Generative Adversarial Neural Networks). Despite the often (but not always) superior generative quality of software like Stable Diffusion, Dall-e and Mid Journey I found the results to be too predictable and often a bit lame when a particular ‘style’ was applied.

My journey begins with Wombo AI out of Canada. Here are a random selection of some of the earliest images from back in Jan 2023.

It’s been a while, a whole year nearly since posting to this site. A lot has happened some of which is worth talking about. It’s been the year of generative image making and ChatGPT. Myths and legends abound and for me it was a year to do some myth busting.

ChatGPT was my entry point. Through some extensive dialog with educators who had concerns about student usage and the supposed advantages thereof I found myself doing some system testing to see the extent of the application of generative text and to better understand the process. Leaving aside the standard “write me an essay about…….” I went straight for the throat and entered questions directly from HSC exam papers (relevant only to teachers in NSW Australia) What i discovered was that that ChatGPT struggled with contextualising the nature of the question and the response. Firstly given that most exam or essay questions are not questions but instructions; questions generally start with what, when, where, why, who, how etc and not verbs such as discuss, investigate, analyse, compare, describe, assess, clarify, evaluate, examine, identify, outline etc the responses were consistently very average; if you were to scale them most would fall into the ‘C’ range. So; no advantage to be had here. This then led to ‘why does this happen’ and to understand that I realised i had to look at ‘how’ this all happens. That led to looking at decoding and encoding text.

Machines, so it appears do not ‘read’ text as we do. Whilst the eyes and brain are involved in a decoding and encoding of the letter shapes and their relationships, machine learning involves encoding text something like this;

‘\x74\x27\x73\x20\x62\x65\x65\x6e\x20\x61\x20\x77\x68\x69\x6c\x65\x2c\x20\x61\x20\x77\x68\x6f\x6c\x65\x20\x79\x65\x61\x72\x20\x6e\x65\x61\x72\x6c\x79\x20\x73\x69\x6e\x63\x65\x20\x70\x6f\x73\x74\x69\x6e\x67\x20\x74\x6f\x20\x74\x68\x69\x73\x20\x73\x69\x74\x65\x2e\x20\x41\x20\x6c\x6f\x74\x20\x68\x61\x73\x20\x68\x61\x70\x70\x65\x6e\x65\x64\x20\x73\x6f\x6d\x65\x20\x6f\x66\x20\x77\x68\x69\x63\x68\x20\x69\x73\x20\x77\x6f\x72\x74\x68\x20\x74\x61\x6c\x6b\x69\x6e\x67\x20\x61\x62\x6f\x75\x74\x2e\x20\x49\x74\x27\x73\x20\x62\x65\x65\x6e\x20\x74\x68\x65\x20\x79\x65\x61\x72\x20\x6f\x66\x20\x67\x65\x6e\x65\x72\x61\x74\x69\x76\x65\x20\x69\x6d\x61\x67\x65\x20\x6d\x61\x6b\x69\x6e\x67\x20\x61\x6e\x64\x20\x43\x68\x61\x74\x47\x50\x54\x2e\x20\x4d\x79\x74\x68\x73\x20\x61\x6e\x64\x20\x6c\x65\x67\x65\x6e\x64\x73\x20\x61\x62\x6f\x75\x6e\x64\x20\x61\x6e\x64\x20\x66\x6f\x72\x20\x6d\x65\x20\x69\x74\x20\x77\x61\x73\x20\x61\x20\x79\x65\x61\x72\x20\x74\x6f\x20\x64\x6f\x20\x73\x6f\x6d\x65\x20\x6d\x79\x74\x68\x20\x62\x75\x73\x74\x69\x6e\x67\x2e\x20’

The above is the first paragraph of this post encoded in UTF-8 Hex code

Encoded in UTF-32 it looks like this; u+00000074u+00000027u+00000073u+00000020u+00000062u+00000065u+00000065u+0000006eu+00000020u+00000061u+00000020u+00000077u+00000068u+00000069u+0000006cu+00000065u+0000002cu+00000020u+00000061u+00000020u+00000077u+00000068u+0000006fu+0000006cu+00000065u+00000020u+00000079u+00000065u+00000061u+00000072u+00000020u+0000006eu+00000065u+00000061u+00000072u+0000006cu+00000079u+00000020u+00000073u+00000069u+0000006eu+00000063u+00000065u+00000020u+00000070u+0000006fu+00000073u+00000074u+00000069u+0000006eu+00000067u+00000020u+00000074u+0000006fu+00000020u+00000074u+00000068u+00000069u+00000073u+00000020u+00000073u+00000069u+00000074u+00000065u+0000002eu+00000020u+00000041u+00000020u+0000006cu+0000006fu+00000074u+00000020u+00000068u+00000061u+00000073u+00000020u+00000068u+00000061u+00000070u+00000070u+00000065u+0000006eu+00000065u+00000064u+00000020u+00000073u+0000006fu+0000006du+00000065u+00000020u+0000006fu+00000066u+00000020u+00000077u+00000068u+00000069u+00000063u+00000068u+00000020u+00000069u+00000073u+00000020u+00000077u+0000006fu+00000072u+00000074u+00000068u+00000020u+00000074u+00000061u+0000006cu+0000006bu+00000069u+0000006eu+00000067u+00000020u+00000061u+00000062u+0000006fu+00000075u+00000074u+0000002eu+00000020u+00000049u+00000074u+00000027u+00000073u+00000020u+00000062u+00000065u+00000065u+00…………etc, etc

So when a prompt is entered into ChatGPT it is first encoded so that it can be read. The response from ChatGPT is likewise scripted in machine language and then decoded into text. How does it work?

It’s primarily a predictive model, that predicts sequences based on learnings from ‘other encodings’, because that’s what the neural network reads. When this is understood a lot of the ‘myth-understandings’ about machine learning are to some degree dissolved. The better the quality of the language structure of the texts that neural networks are trained on the better the likelihood of a cohesive albeit somewhat standardised, (bearing in mind the encoding and decoding sequence) response.

to be continued @ STAGESIX